Guidance Document

Published: December 2024

Introduction

In March 2024 the Council of the College of Veterinarians of Ontario published a Position Statement on the Digital Age and Veterinary Medicine. This Statement aims to encourage innovation within the veterinary community while acknowledging the need to manage risks in an unregulated environment.

The premise of all care provided by a veterinarian to an animal or group of animals is that the licensed practitioner is accountable for their choices and decisions. As new choices with respect to technology and innovation become available, there is an increased need for a veterinarian to have information that helps them assess the risk of use against their ability to reduce or mitigate any potential harm(s).

This companion Guide is intended to help veterinarians adopt medical devices enabled by artificial intelligence (AI) in their practice. It is understood that some level of risk will be present in every decision made. This document is not intended to provide either legal or industry authority on this rapidly emerging and complex topic. Rather, it sets out a framework of key considerations that a veterinarian should keep in mind when selecting medical devices enabled by AI to advance care options for patients.

Terminology

Within the artificial intelligence sector, multiple terms are used to describe the tools being developed and the processes and functions behind them. While it would be preferable to provide a definitive list, there is currently no single source of agreed-upon definitions – provincially, nationally, or internationally.

Foremost, it is important to keep in mind that, other than some medical devices that use forms of energy, such as imaging and laser equipment, no medical device for use in animal health care has oversight or approval from a regulatory authority such as Health Canada. Given this lack of oversight, using Health Canada’s directions for human health care as a guidepost for knowledge, expertise and direction appears most logical.

A cornerstone to this discussion is the term ‘medical device’ adopted from the International Medical Device Regulators Forum. While this definition is intended for human medicine, its overall description informs animal health care and is described as follows:

Medical Device: Any instrument, apparatus, implement, machine, appliance, implant, reagent for in vitro use, software, material or other similar or related article, intended by the manufacturer to be used alone or in combination, for human beings, for one or more of the specified medical purpose(s) of

- diagnosis, prevention, monitoring, treatment or alleviation of disease,

- diagnosis, monitoring, treatment, alleviation of, or compensation, for, an injury,

- investigation, replacement, modification, or support of the anatomy, or of a physiological process,

- supporting or sustaining life,

- control of conception,

- cleaning, disinfection or sterilization of medical devices,

- providing information by means of in vitro examination of specimens derived from the human body;

and does not achieve its primary intended action by pharmacological, immunological, or metabolic means, in or on the human body, but which may be assisted in its intended function by such means.

This definition, however, does not address the matter of AI and machine learning in relation to these Devices.

The work on Medical Devices has, therefore, now been extended to cover Machine Learning – enabled Medical Devices. Put simply, regardless of which type of learning method is used – continuous, semi-supervised or supervised – integrating machine learning with a medical device means that data drives the outcomes the veterinarian relies upon for decision making.

Risk Profile

As noted in the Spring/Summer edition of the University of British Columbia Magazine, “AI is only as good as the data that underlies it, and with most of that data collected for commercial purpose to appeal to certain types of customers, the data sets are inherently biased, and certain types of information are privileged over others.”

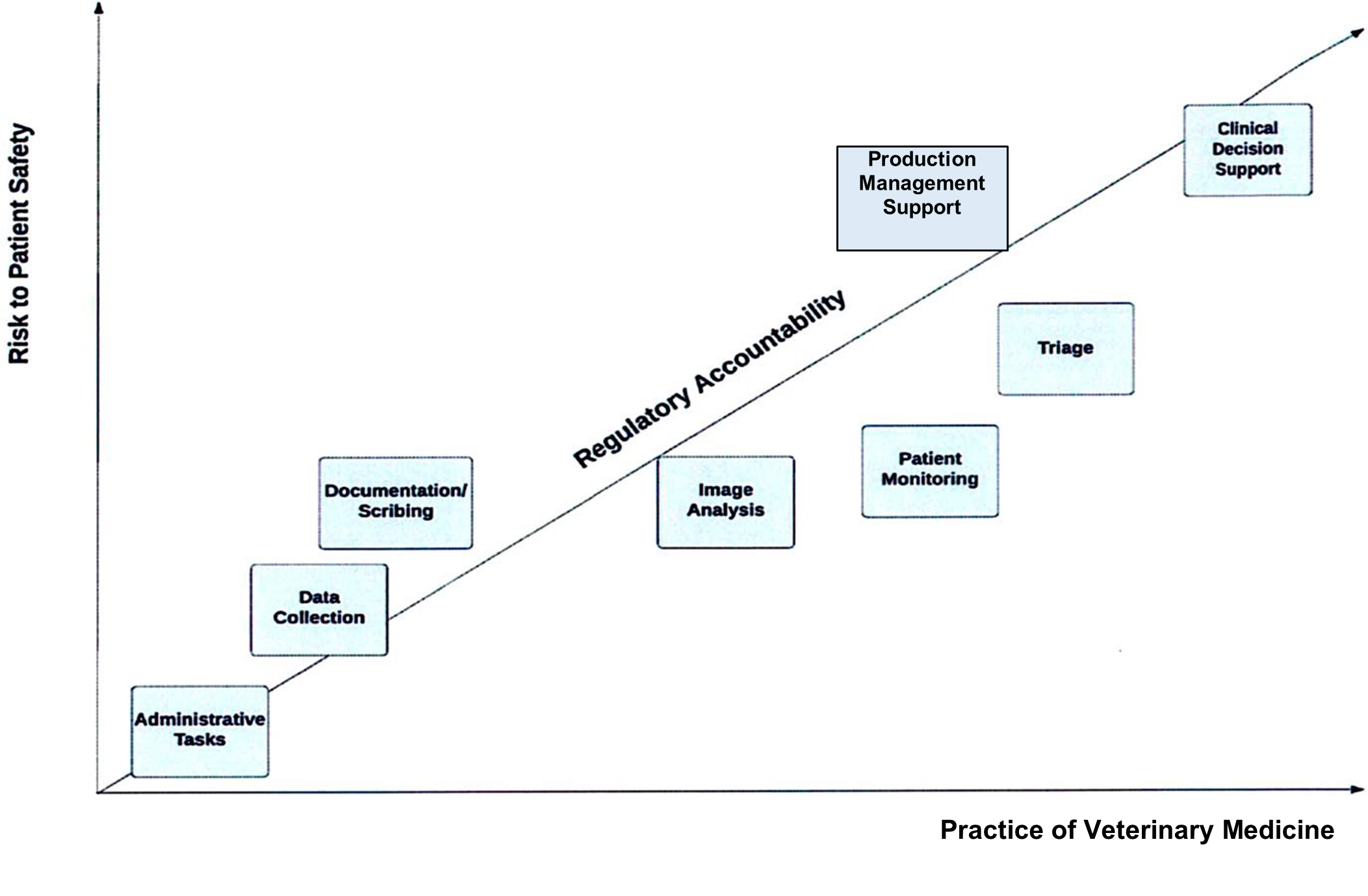

The following chart provides an overview of potential risk to patient safety modeled against emerging medical devices enabled by AI.

Guiding Principles

As with the challenge of settling on common definitions, published guidance and public discussion on the principles that should guide decision making in the development and use of tools supported by artificial intelligence are wide-ranging and audience-dependent. To best assist veterinarians in practice, the College is adopting Health Canada’s references, which share an overarching theme: the need to understand and consider transparency as the foundation for choices about the use of decision-support tools.

The use of AI to assist in the practice of veterinary medicine does not replace the need for clinical reasoning and discretion on the part of the veterinarian. A veterinarian should understand the tools they are using by being knowledgeable about each tool’s design, the training data used in its development, and its outputs, in order to assess reliability and to identify and mitigate bias.

Once a veterinarian chooses to use AI, they accept responsibility for responding appropriately to the AI’s recommendations.

When using transparency as a guiding principle to assist with risk mitigation, the following questions may help a veterinarian discern whether selecting a machine learning-enabled medical device will add value to their practice.

- Is the purpose and function of the medical device clear?

- What data set is the device built from?

- What biases may exist in the data and how have they been accounted for?

- Is the medical device relevant to the identified need in the veterinary practice?

- Are the risks and benefits to the care of animals clearly identified?

- Is the data available and easily managed by a veterinarian? The veterinary team?

- Is the medical device updated regularly and in a timely manner?

- Does the medical device add value to the competence of a veterinarian?

- Is the data storage private and secure? Who owns the data? Is its use known and protected?

Additional questions a veterinarian could ask a vendor of a machine learning – enabled medical device include:

- Who was involved in the design?

- What does the AI propose to answer?

- What methods does the AI use to arrive at its outcomes?

- How was the AI trained?

- How was the AI tested?

- How did the model perform when tested?

- How is the performance of the AI being monitored by the vendor to determine any needed changes or improvements?
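The question "How did the model perform when tested?" is usually answered with counts of correct and incorrect results on a test set. The short Python sketch below shows one way those counts could be turned into commonly reported measures. It is an illustration only: the function name `summarize_test_results` and all of the numbers are hypothetical and are not drawn from any real device, vendor, or College requirement.

```python
# Hypothetical illustration: the function name and all counts are invented
# for this example and do not describe any real device or vendor.

def summarize_test_results(true_pos, false_pos, true_neg, false_neg):
    """Turn test-set counts into common performance measures.

    true_pos:  animals with the condition that the device flagged
    false_pos: animals without the condition that the device flagged
    true_neg:  animals without the condition that the device did not flag
    false_neg: animals with the condition that the device missed
    """
    return {
        # Share of affected animals the device detects
        "sensitivity": true_pos / (true_pos + false_neg),
        # Share of unaffected animals the device correctly clears
        "specificity": true_neg / (true_neg + false_pos),
        # Chance that a flagged animal truly has the condition
        "positive_predictive_value": true_pos / (true_pos + false_pos),
        # Chance that an unflagged animal is truly free of the condition
        "negative_predictive_value": true_neg / (true_neg + false_neg),
    }


# Made-up test set of 1,000 animals, 100 of which have the condition.
results = summarize_test_results(true_pos=90, false_pos=30, true_neg=870, false_neg=10)

for name, value in results.items():
    print(f"{name}: {value:.1%}")
```

In this made-up sample the device detects 90% of affected animals and clears about 97% of unaffected ones, yet only three in four flagged animals actually have the condition. Figures like these depend on how common the condition is in the tested population, which is one reason to ask the vendor how closely the test data resemble the patients seen in the practice.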

Without regulatory oversight of medical devices in the veterinary sector, it is important for veterinarians to be thoughtful clinicians as they seek to introduce AI-supported tools into practice.

Striving to achieve transparency for the user and the client in the choices made serves as a solid foundation for weighing benefits against risks or harms.

Resources and Supports

As veterinarians move their facilities and their teams forward, including by introducing Machine Learning – enabled Medical Devices, seeking opportunities to gain knowledge and skill in the selection and implementation of these tools will be critical to success.

Trusted sources for guidance will continue to include the College of Veterinarians of Ontario, and the Canadian Veterinary Medical Association. New groups equipped to debate and recommend medical devices for use in the veterinary sector will likely emerge and will be important to watch.

Conclusion

The incorporation of AI into veterinary practice is inevitable and presents tremendous potential benefits to patients and veterinarians alike. It also presents significant risk of harm if such tools are developed and used irresponsibly. Veterinarians have always had the responsibility to make choices and decisions that are ethical, reasoned and display good judgement on behalf of the animals and clients they serve. Adherence to the traditional professional expectation to do no harm remains at the forefront in the evolution of medical devices enabled by artificial intelligence.

References

1. College of Veterinarians of Ontario Position Statement (2024). Embracing Innovation and the Digital Age in Veterinary Medicine.

2. Federation of State Medical Boards (April 2024). Navigating the Responsible and Ethical Incorporation of Artificial Intelligence into Clinical Practice.

3. Government of Canada (May 2024). Guiding Principles for the use of AI in Government.

4. Government of Ontario (September 2023). Principles for Ethical Use of AI (Beta).

5. Government of Ontario (April 2024). Ontario’s Trustworthy Artificial Intelligence (AI) Framework.

6. Health Canada (October 2021). Good Machine Learning Practice for Medical Device Development Guiding Principles.

7. Health Canada (2021). Transparency for Machine Learning – Enabled Medical Devices: Guiding Principles.

8. Health Canada (September 2023). Draft Guidance Document: Pre-market guidance for machine learning – enabled medical devices.

9. Innovation Science and Economic Development Canada (2019). Canada’s Digital Charter in Action: A Plan by Canadians for Canadians.

10. International Medical Device Regulators Forum (May 2022). Machine Learning – enabled Medical Devices: Key Terms and Definitions. Artificial Intelligence Medical Devices (AIMD) Working Group.

11. Privacy Commissioner of Ontario (February 2024). Artificial Intelligence in the Public Sector: Building trust now and for the future. Commissioner’s Blog.

12. University of British Columbia Magazine (Spring/Summer 2024). AI for Social Good: Code of Ethics, pages 13 – 16.

13. U.S. Food and Drug Administration (January 2021). Artificial Intelligence / Machine Learning – Based Software as a Medical Device (SaMD).

14. U.S. Food and Drug Administration (March 2024). Artificial Intelligence and Medical Products.